Efficacy Study: A Research-based Personal Development Companion for Students

- The Rflect app demonstrated a more positive impact on 5 out of 11 tested competences compared to a control group, specifically enhancing self-reflection, purpose: self-transcendence, goal orientation, system thinking, and trust.

- Qualitative analysis indicated that study group participants using Rflect were more engaged and reflective.

- Study group participants shared richer and more diverse insights compared to the control group, which generally perceived less change in their development.

- Rflect’s influence appears to foster ongoing personal development by encouraging regular reflective practices, which were associated with increased self-awareness, optimism, and goal setting among the students.

- Students in the control group reported that simply answering the assessment questions triggered them to reflect.

Executive Summary

The objective of this efficacy study was to further evaluate the perceived improvement in skills development among student users of the Rflect app during the spring semester of 2024. It follows a preliminary study (Rflect et al., 2024) carried out during the autumn semester of 2023, which confirmed that Rflect had a positive impact on student users’ skills development and their ability to reflect. The analysis assessed mean score changes between pre- and post-surveys across 11 competences inspired by the Inner Development Goals (Inner Development Goals, 2021), and examined qualitative responses using qualitative content analysis techniques. The quantitative results showed that Rflect had a more positive impact on 5 out of 11 competences compared to the control group, which did not use Rflect during the same period. Similarly, the qualitative responses from participants in the study group were in general more reflective and more diverse in terms of perceived changes than those of the control group. This study serves as an additional step towards making Rflect more evidence-based and will be followed by further studies aimed at iterating Rflect’s scientific base by evolving its samples, data collection design and significance, and ultimately its scientific rigour. The results should therefore be interpreted with cautious optimism.

Acknowledgements

Written by Prof. Dr. Anna Jasinenko (Assistant Prof. Organizational Behavior at the University of St. Gallen) and Michael Ohlinger (PhD candidate at the University of St. Gallen), who carried out the quantitative analysis, and Dr. Monica Barroso (Senior Researcher, Lecturer and Project Manager, University of St. Gallen), responsible for the qualitative analysis, in collaboration with Ella Stadler-Stuart and Niels Rot (Rflect).

Background

In recent years, the emphasis on personal development of competences and reflective practices in education has intensified, alongside the development of sustainability-related leadership skills. Aiming to accelerate this shift in higher education, the Swiss edtech startup Rflect built a web application in 2023 to help universities foster a lifelong habit of inner development in their students. The Rflect approach enables students to personalize and reflect on their learning journeys in real time, while also enabling students and lecturers to track user progress on competences such as self-awareness, critical thinking, empathy, and others described by the Inner Development Goals (IDGs) framework.

Research Approach and Methodology

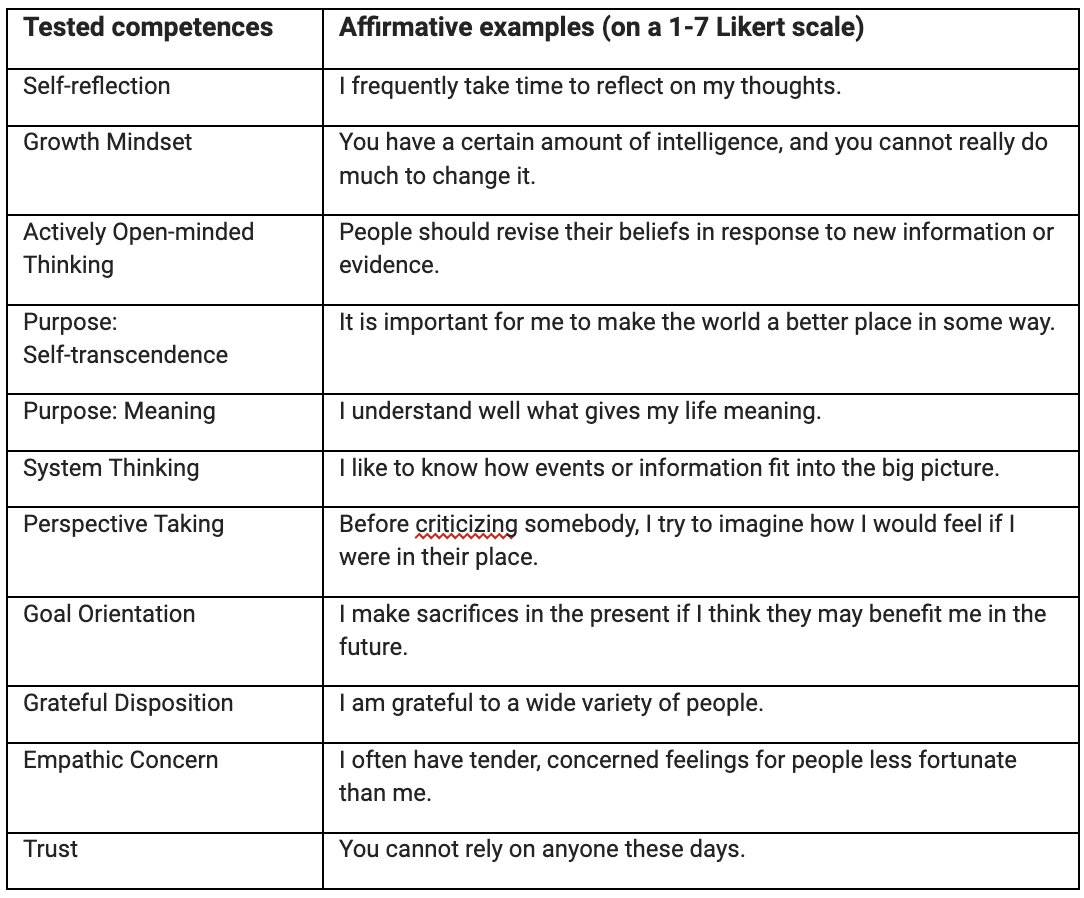

This report presents the results of an efficacy study conducted during the spring semester of 2024, aimed at evaluating the effectiveness of Rflect in enhancing student competence development over one academic semester. By comparing pre- and post-survey results of courses that used Rflect (study group) with those of courses that did not (control group), and by analysing qualitative feedback (Mayring, 2019), this study seeks to understand the potential impact of Rflect on various dimensions of personal development. The study also builds on foundational insights from a preliminary self-assessment carried out during the autumn semester of 2023, after which the more robust design used here was developed and implemented.

Students in both groups were invited to complete pre- and post-assessment surveys, starting with quantitative questions rated on a 7-point Likert scale (1-7). Each survey took approximately 15 minutes to complete. Between the two surveys, students in the study group followed their regular curricula while using the Rflect app to reflect on and learn from their experiences; students in the control group did not use Rflect. Upon completing the post-survey, students were asked to write meta-reflections on their comparative results from the two surveys. Figure 1 shows example items for each of the tested competences from the spring semester 2024 surveys:

Figure 1: Examples of affirmative items for each of the tested competences.


In addition to the quantitative survey, comparative qualitative questions were part of the post-survey, for example:

- What are you learning or becoming more aware of about yourself after completing this survey? Were there any surprises or unexpected insights?

- When you reflect on your answers to this survey again in 6 months, what do you hope you have learned, changed or accomplished? What aspects or skills are you eager to explore or enhance moving forward?

- In what aspects or specific skills do you personally feel you have grown or changed the most since your pre-assessment? Give 1-3 examples. Is this visible in your results?

Sample Selection

The survey respondents consisted of 119 students enrolled in 6 different higher education programs in German-speaking Switzerland, as well as one English-speaking program in the Netherlands, during the European spring semester of 2024. These programs included Certificates of Advanced Studies (further education) as well as modules and courses within Bachelor or Master programs. Of these respondents, 94 students completed both the pre- and post-surveys: 46 in the study group and 48 in the control group. Only their responses were evaluated for this paper.

Development of Self-Assessment Survey

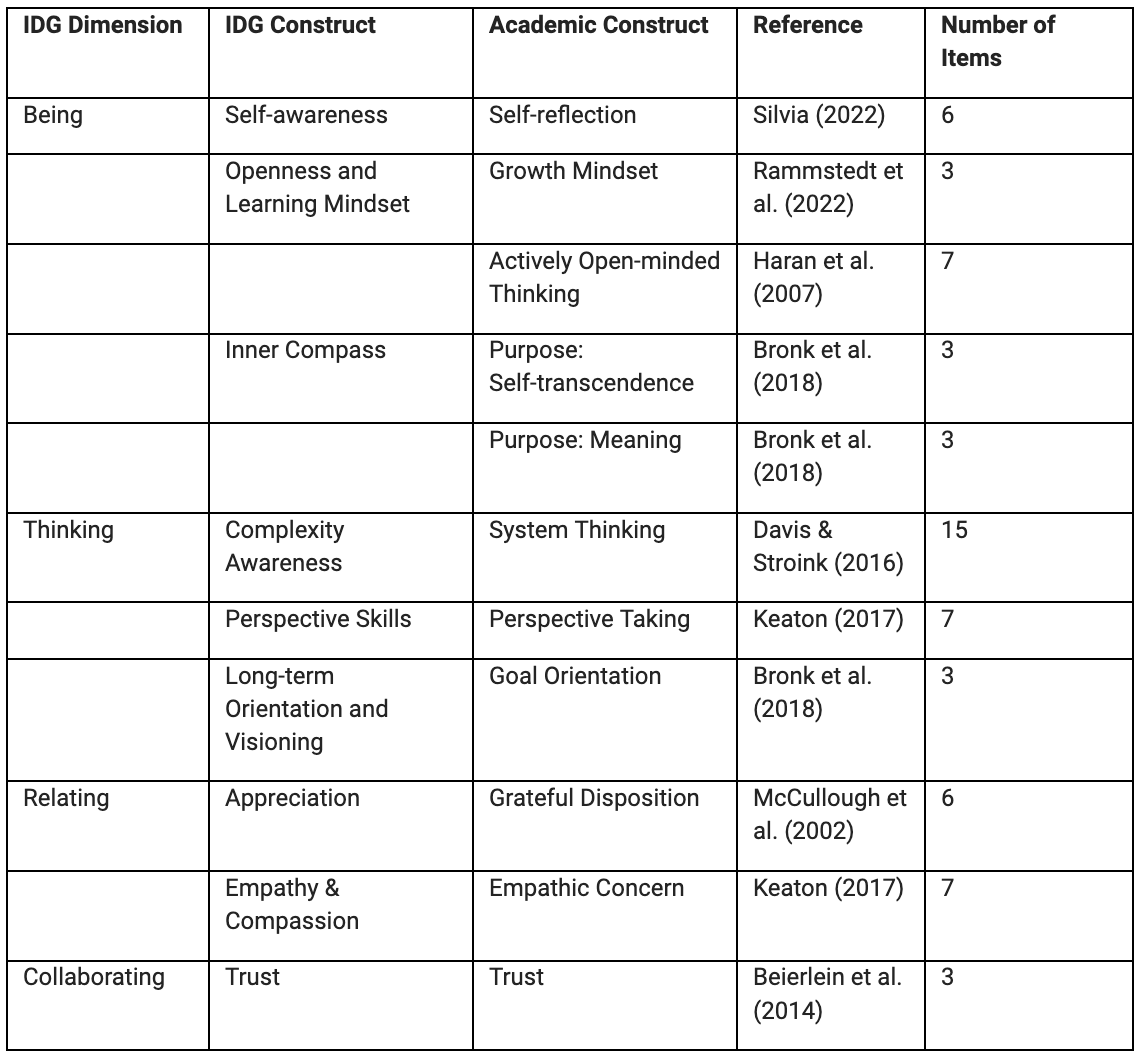

We reviewed the literature to identify validated and peer-reviewed measurement scales for competences represented in the IDGs (see Figure 2).

Overview of Scales:

Figure 2: Overview of assessment scales, developed by Prof. Dr. Anna Jasinenko


During the pre- and post-surveys, participants were asked to rate each item on a 7-point Likert scale with the labels: (1) Strongly Disagree (2) Disagree (3) Somewhat Disagree (4) Neither Agree Nor Disagree (5) Somewhat Agree (6) Agree (7) Strongly Agree.

Key Quantitative Findings

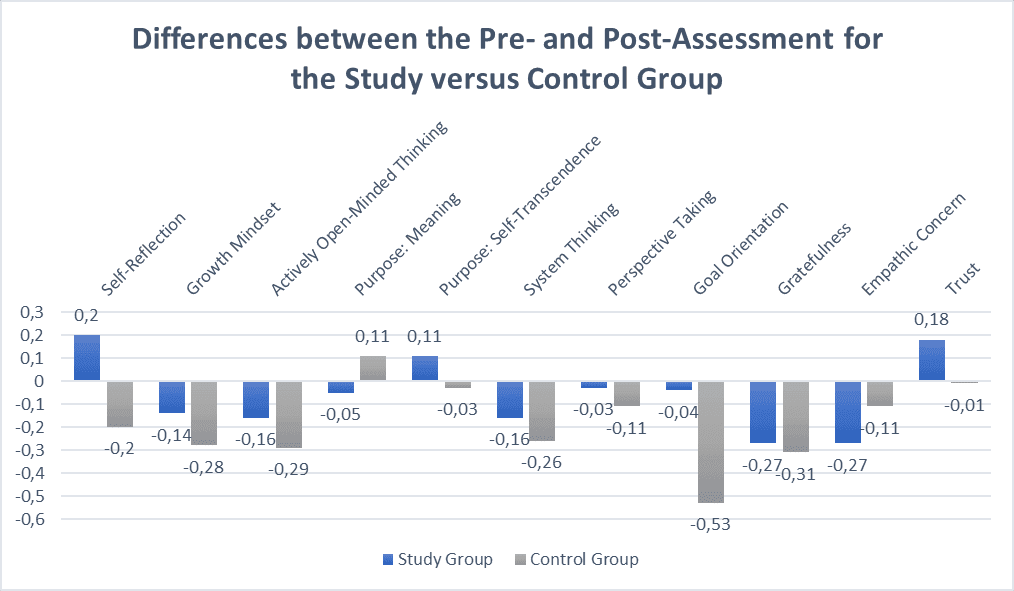

To analyse the difference in pre-post development between the study and control groups, we conducted an analysis of covariance (ANCOVA) for each of the 11 measured competences, controlling for random baseline differences, i.e. differences in the pre-assessment scores across the different courses. The results reveal that the study group showed a more positive development than the control group, at a significant or at least marginally significant level (p ≤ .10), in the following five competences:

- Self-reflection (p=.09)

- Purpose: Self-transcendence (p=.10)

- Goal Orientation (p<.01)

- System Thinking (p=.09)

- Trust (p=.01)

In none of the competences, on the other hand, did the control group show a more positive development than the study group. Overall, we conclude that using Rflect was associated with a better development of five out of eleven tested competences.
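As a rough illustration of the baseline adjustment described above, the following minimal sketch fits the simplest ANCOVA-style model, post = b0 + b1·pre + b2·group, by ordinary least squares. The report does not specify the exact model or software used, so the function names, the single pre-score covariate and the data are assumptions for illustration only. The scores are invented and noise-free, constructed so that the adjusted group effect is 0.4 by design. A real analysis would also test the significance of the group coefficient, which is omitted here.

```python
from typing import List, Sequence, Tuple


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def ancova_coefficients(
    pre: Sequence[float], post: Sequence[float], group: Sequence[int]
) -> Tuple[float, float, float]:
    """Fit post = b0 + b1*pre + b2*group via the normal equations.

    group is 1 for the study group and 0 for the control group, so b2 is
    the difference in post-scores after adjusting for the pre-score.
    """
    rows = [[1.0, float(p), float(g)] for p, g in zip(pre, group)]
    k = 3
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, post)) for i in range(k)]
    b0, b1, b2 = solve_linear_system(xtx, xty)
    return b0, b1, b2


# Hypothetical 1-7 Likert scores for one competence (NOT data from the study).
pre_scores = [4, 5, 6, 3, 4, 5, 6, 3]
group_flags = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = study group, 0 = control group
post_scores = [1.0 + 0.5 * p + 0.4 * g for p, g in zip(pre_scores, group_flags)]

intercept, pre_slope, group_effect = ancova_coefficients(
    pre_scores, post_scores, group_flags
)
print(f"adjusted group effect: {group_effect:.2f}")
```

A positive group coefficient means that, for students with the same pre-score, the study group ends the semester with a higher post-score than the control group.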

We attribute the positive development in self-reflection to Rflect’s clear focus on self-reflection exercises. The more positive development of a self-transcendental purpose could be due to the fact that many reflection exercises focus beyond the self and inspire students to reflect on the relation of the self to the world around them. Many reflection exercises also involve reflecting on personal goals, which may explain the positive effect of Rflect on goal orientation. The reflections also motivated the students to consider topics from different angles, which may explain the positive effect of Rflect on system thinking. The positive effect of Rflect on trust may stem from Rflect’s peer-coaching exercises, which help students to collaborate and build trust with each other and, thereby, to develop a higher general trust in others.

Interestingly, of these five competences, only self-reflection, self-transcendence and trust showed a positive development in the study group (i.e., a higher score in the post-test than in the pre-test). Goal orientation remained almost the same, and system thinking developed in a negative direction, although less negatively than in the control group (Figure 3 shows the developments of the study and the control group). In comparison, all eleven competences in the control group showed a negative development. This could be explained by the strict demands to focus on specific course topics in order to excel in exams, which leave little room for “non-graded” personal development of the “human skills” emphasized by the IDGs. A further hypothesis is that students at the beginning of the semester, when the pre-survey was done, are more optimistic about how they rate their competences. Towards the end of the semester, however, the intensive workload and the limited time dedicated to personal development might have made them more self-critical. In turn, Rflect seems to help reduce or even reverse this negative development for several important competences by supporting students in understanding that personal development is an ongoing practice.

Figure 3: Mean Improvement Scores by Competence Dimension by Rflect users (study group) and non-users (control group).
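For readers who want to see how a mean improvement score of this kind can be computed, here is a minimal sketch. It assumes the score is the average of each student's post-score minus pre-score for one competence; the report does not spell out the exact aggregation, and the function name and the scores below are hypothetical, not study data.

```python
from statistics import mean
from typing import Sequence


def mean_improvement(pre: Sequence[float], post: Sequence[float]) -> float:
    """Average per-student change (post minus pre) for one competence."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain one score per student")
    return mean([after - before for before, after in zip(pre, post)])


# Hypothetical 1-7 Likert scores for four students per group (NOT study data).
study_pre, study_post = [5.0, 4.0, 6.0, 5.0], [6.0, 4.0, 6.0, 6.0]
control_pre, control_post = [5.0, 6.0, 4.0, 5.0], [4.0, 6.0, 4.0, 4.0]

study_change = mean_improvement(study_pre, study_post)
control_change = mean_improvement(control_pre, control_post)
print(f"study: {study_change:+.2f}, control: {control_change:+.2f}")
```

A negative value indicates that students rated themselves lower at the end of the semester than at the beginning.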


Key Qualitative Findings

Upon completing the quantitative questions in the post-survey, students were able to view their comparative results and were asked to write meta-reflections. These qualitative responses complement the quantitative results, in particular the responses to the comparative questions “What are you learning or becoming more aware of about yourself after completing this assessment? Were there any surprises or unexpected insights?” (Q1) and “In what aspects or specific skills do you personally feel you have grown or changed the most since your pre-assessment?” (Q2).

As a general finding, the responses from study group participants were more extensive, reflective and diverse in terms of perceived changes, while a substantial portion of participants from the control group stated that they had not perceived much change between the pre- and post-assessment. Below are some quotes that illustrate this difference, which is consistent with the quantitative results.

Study Group

The overall analysis of the qualitative responses revealed that the study group participants benefited from using Rflect during the semester, as the selected quotes below illustrate:

“I observed a bit of cognitive dissonance in my own thinking - there is a way how I would like to perceive the world and act within it, and then there is reality. The two layers are not always congruent.”

“A surprise: I think I’m becoming less trustful, more cautious about what I’m hearing. I’m growing more critical and learning to make my own opinions.”

“After completing this survey, I realized I’m more reflective and self-aware than I initially thought. I found it surprising how much I value introspection and personal growth.”

“After completing this survey, I realized I thrive in collaborative environments and need to seek more teamwork opportunities. It also highlighted my need to improve time management skills.”

“From this survey I realized that I am grateful for many things in life and always want to help others. Not everyone has the same privileges as me.”

“Completing this survey made me more aware of my evolving interests and strengths. I was surprised by how much my priorities have shifted compared to previous years.”

“After completing this survey, I am learning that I have a deep inner awareness of my own consciousness and emotions. It has provided some unexpected insights into how I experience and process my subjective feelings and state of being.”

“I think I have changed a lot since I was a child, and it seems that I’ve become a bit more negative/pessimistic and cynical about the world. I am not pleased with this trend over time, but it’s enlightening to be aware about it so that I can do something to combat or understand why it is happening.”

Control Group

Overall, a substantial number of responses showed little or no perceived change between the pre- and post-surveys, as the selected quotes below demonstrate:

“No surprises or unexpected insights. Unsure what I have learned about myself. Need time to reflect.”

“Answers were pretty similar to the first survey, I have not changed much.”

“I almost never take the time to ask myself these questions.”

However, a few responses showed that just the fact of responding to the pre- and post-surveys triggered some reflection:

“I am surprised that some questions concentrated on the points with which I am struggling the most at the moment, which are faith in the future in general, the people, and myself. I often think about the majority of the questions asked here, and it was fun and interesting to answer them and get to know myself in depth. It was surprising to see the importance of these topics since, until yesterday, I thought there were not so many people stressing out about them.”

“It is good to be asked those questions in order to stay aware and be more present and grateful about life. There were no big surprises but a lot of things that I forgot to think about especially because I feel very stressed at the moment.”

“I learned that I care a lot about other people and try to put myself in their shoes before judging them.”

Qualitative Content Analysis

This contrast between the study and the control group was further supported by a qualitative content analysis of the responses based on two content analytical techniques: inductive category formation (categories extracted from the responses) and deductive category application (coding based on the tested competences or perception ranking). For example, the content analysis of the coded responses to Q1 indicates a rate of 48% high perception and 27% low perception of personal growth in the study group, compared with 20% high perception and 41% low perception in the control group.

Regarding the qualitative responses to Q2, the study group scored higher than the control group in all perceived skills developed during the semester in terms of length and frequency, confirming the quantitative results. Self-reflection was the most frequently mentioned competence in both groups. Within the study group, 22% of a total of 97 coded passages refer to improvements in self-reflection, followed by empathic concern, trust, perspective taking and system thinking. In the control group, 35% of a total of 31 coded passages relate to self-reflection, followed by goal orientation, trust and actively open-minded thinking. The higher rate in the control group is a surprising result, given that the quantitative results show higher scores for the study group on the same competence. Possible explanations lie in the low number of coded passages in each group (as mentioned above) and in a potential sample bias due to the length and depth of answers: many responses were semi-blank or general, suggesting that those who answered more seriously were those who perceived improvements, in particular in self-reflection skills.

Exposure to Rflect and Engagement in Survey

This hypothesis can be explored further by analysing the difference in length of the written responses of both groups. The study group’s responses totalled 1537 words (Q1) and 2098 words (Q2), while the control group’s responses to the same questions totalled 977 words (Q1) and 436 words (Q2), even though the control group is slightly larger (48) than the study group (46). For a more accurate comparison, we normalised these totals by the number of respondents: study group respondents wrote on average 33 words for Q1 and 46 words for Q2, whereas control group respondents wrote on average 20 words for Q1 and 9 words for Q2. This additional analysis indicates a different quality of engagement with the post-assessment survey, possibly due to the use of Rflect by the study group during the semester, which may have provided a favourable context for self-reflective practices.
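The per-respondent means quoted above can be reproduced from the reported word totals and group sizes. The short sketch below assumes that every student who completed both surveys counts in the denominator (including those who left a question blank) and that the means were rounded to the nearest whole word; the variable and function names are illustrative.

```python
from typing import Dict

# Word totals and group sizes as reported in the text above.
WORD_TOTALS: Dict[str, Dict[str, int]] = {
    "study": {"Q1": 1537, "Q2": 2098},
    "control": {"Q1": 977, "Q2": 436},
}
GROUP_SIZES: Dict[str, int] = {"study": 46, "control": 48}


def mean_words_per_respondent(total_words: int, respondents: int) -> int:
    """Mean response length, rounded to the nearest whole word."""
    return round(total_words / respondents)


mean_words = {
    group: {
        question: mean_words_per_respondent(total, GROUP_SIZES[group])
        for question, total in totals.items()
    }
    for group, totals in WORD_TOTALS.items()
}

for group, per_question in mean_words.items():
    print(group, per_question)
```

Under these assumptions the sketch yields 33 and 46 words per respondent for the study group and 20 and 9 for the control group, matching the figures in the text.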

While the study group was kept engaged with reflective practices on a regular basis through the Rflect app throughout the semester, the control group returned to their academic and work responsibilities after the pre-survey. This may partially explain several patterns in the control group’s responses: a higher frequency of responses related to perceived improvements in goal orientation skills (16% versus 6% in the study group), answers written from a more cognitive than emotional perspective, more pessimistic responses and, as a result, less time dedicated to reflective responses. Below are a few selected responses to illustrate the hypothesis that exposure to Rflect during the semester might have had a positive effect on students’ personal development and self-reflection capacities:

“Rflect helped me to reflect for at least one short moment a week, which helped me in my everyday life.” (Study Group)

“It especially helped me to take the time to reflect on my work and behaviour via Rflect to become more self-confident and get structure through the coaching sessions.” (Study Group)

“It is good to be asked those questions in order to stay aware and be more present and grateful about life. There were no big surprises but a lot of things that I forgot to think about especially because I feel very stressed at the moment.” (Control Group)

“I am a bit more pessimistic than I would like to be.” (Control Group)

Future Outlook

Evolving Students’ Personal Development Path

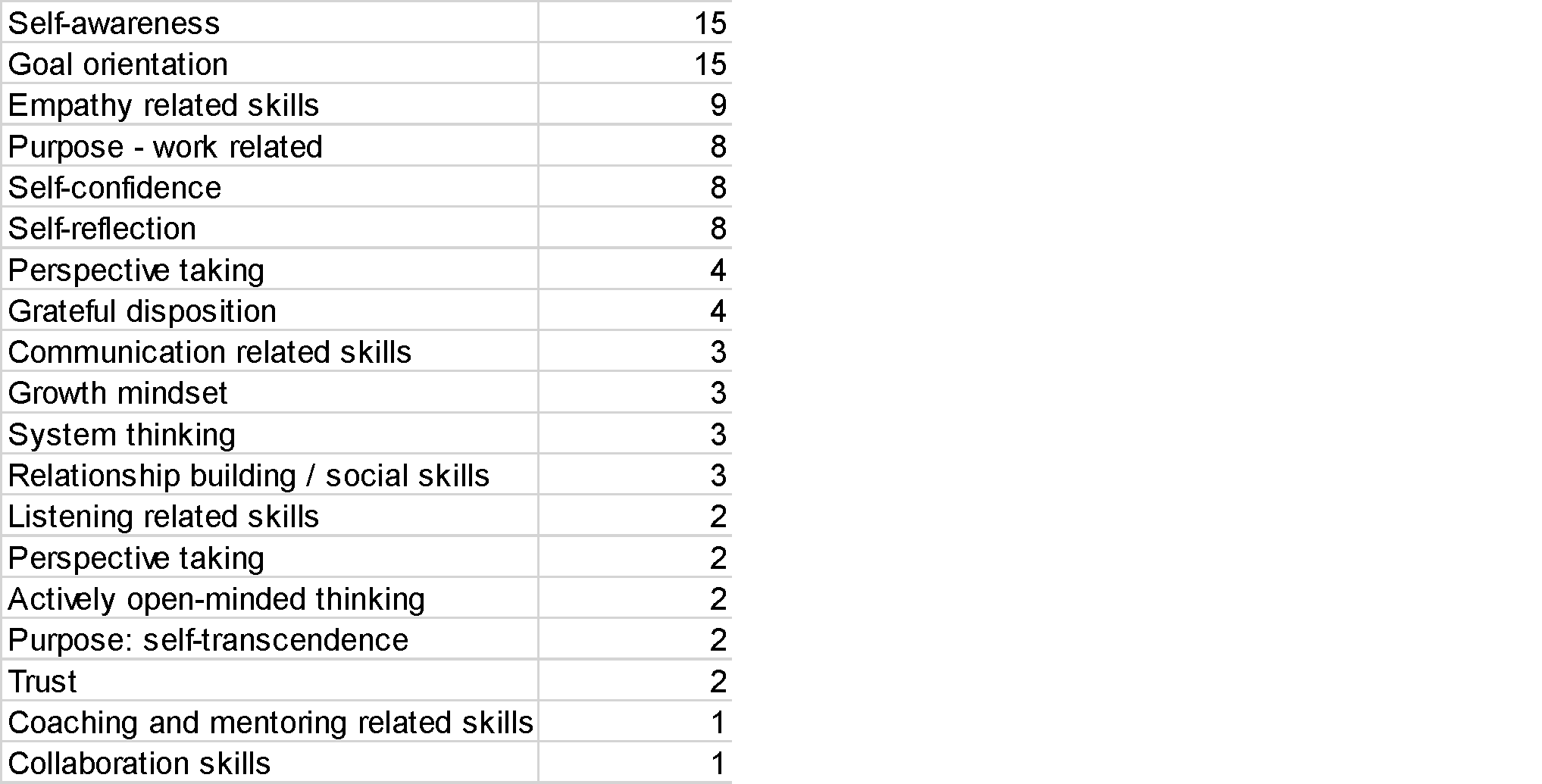

An additional qualitative analysis was carried out in terms of the outlook into the future for both groups, based on the responses to the question “When you reflect on your answers to this survey again in 6 months, what do you hope you have learned, changed, or accomplished? What aspects or skills are you eager to explore or enhance moving forward?” The analysis of the responses to the above-mentioned question by the study group participants resulted in 95 coded passages that revealed the following results (Figure 4):

Figure 4: Ranking of coded passages extracted from the responses from study group participants to the question “When you reflect on your answers to this survey again in 6 months, what do you hope you have learned, changed, or accomplished? What aspects or skills are you eager to explore or enhance moving forward?”


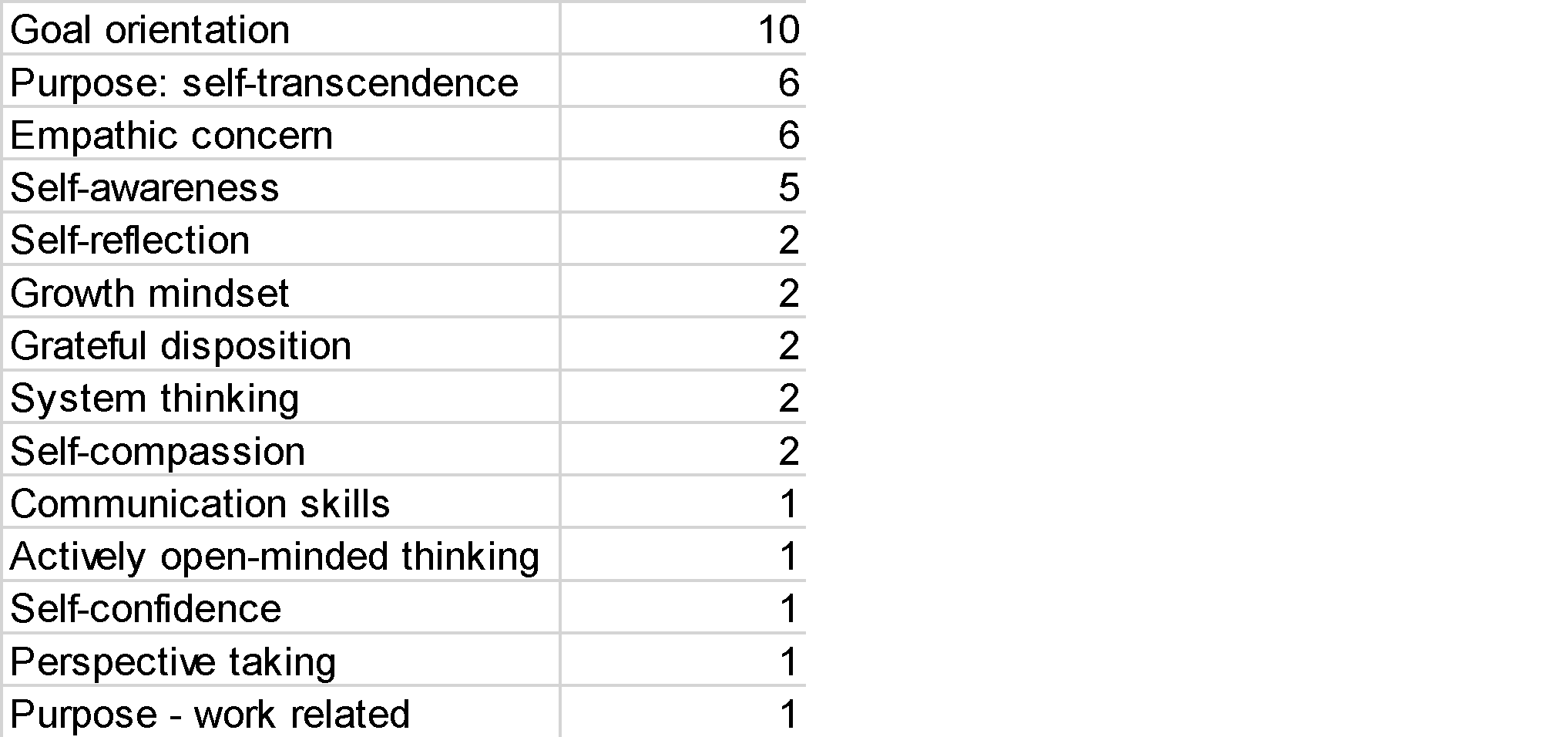

The same analysis carried out for the responses from the control group participants resulted in 42 coded passages and revealed a different pattern (Figure 5):

Figure 5: Ranking of coded passages extracted from the responses from control group participants to the question “When you reflect on your answers to this survey again in 6 months, what do you hope you have learned, changed, or accomplished? What aspects or skills are you eager to explore or enhance moving forward?”


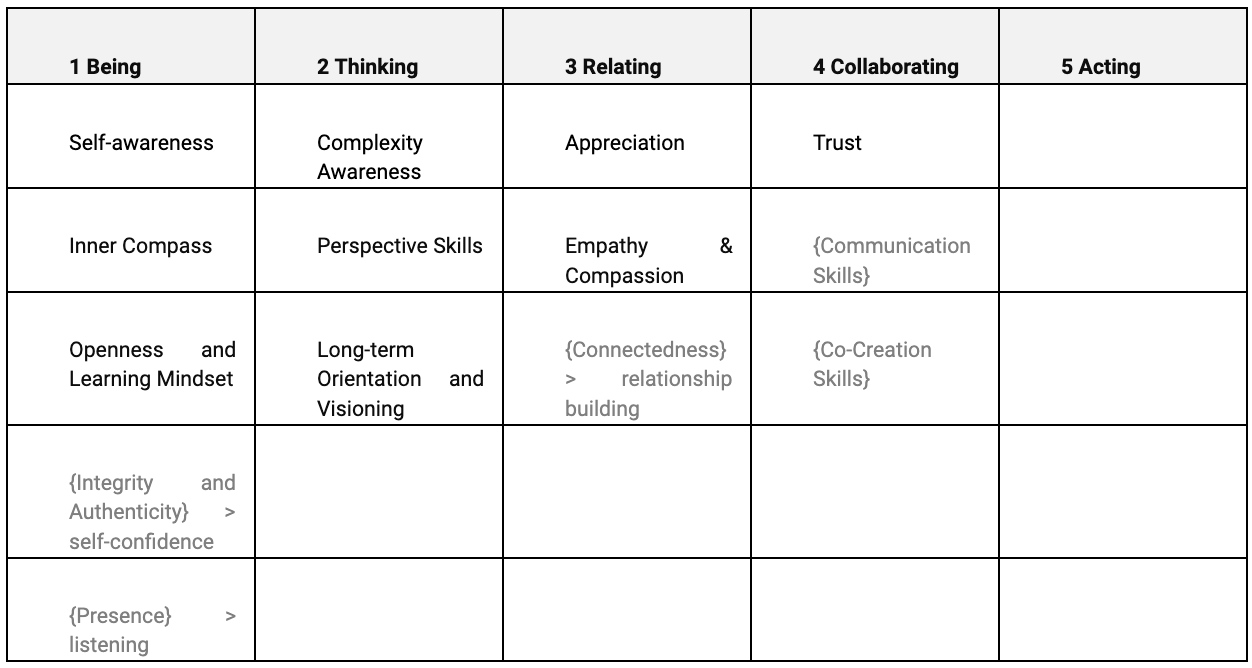

This additional analytical lens confirmed the lower level of engagement of control group respondents compared to study group participants in terms of the length and depth of their responses. This leads to the hypothesis that the use of Rflect might have stimulated not only a greater level of engagement during the semester, but also stronger intentions to keep working on personal development in the future. In addition, respondents’ outlooks on their future personal development path mentioned skill categories beyond those tested. Comparing this set of skills with the IDG framework suggests opportunities to develop Rflect further and to broaden the scope of the pre- and post-self-assessment, as shown in Figure 6:

Figure 6: IDG skills already present in the Rflect assessment at the time of the study (in black) and those not yet present (in grey) but identified through the qualitative analysis.


Innovating Rflect’s Assessment Feature

As for Rflect’s own product development, some of the above-mentioned potential improvements had already been implemented as a result of this study while this paper was being concluded. For example, new competences have been added to the self-assessment for the autumn semester of 2024, and more will be added as soon as suitable academic scales are identified, with the goal of covering all 23 IDG skills in the near future. The current version of the assessment includes the following additional dimensions (extending to the IDG dimension of Acting as well):

- Being - Integrity and Authenticity

- Thinking - Critical Thinking

- Thinking - Long-term Orientation

- Relating - Self-compassion

- Collaborating - Communication Skills

- Acting - Optimism

- Acting - Perseverance

Concluding Remarks

In this study, methodological rigour and alignment with established academic standards were enhanced by a quantitative approach using evidence-based scales, supported by a multi-stage qualitative content analysis, and by the introduction of a control group. In addition, the sample size increased to 94 students, compared to the 39 respondents in the preliminary study. Finally, demographic data such as age, gender identity and journaling practices were collected to understand potential correlations between these factors and skills development outcomes. We aim to extend the measures and collect more data so that demographic differences and further correlations can be analysed in future studies with sufficiently large samples.

This study serves as an additional step towards making Rflect more evidence-based and will be followed by further studies aimed at iterating Rflect’s scientific base by evolving its samples, data collection design and significance, and ultimately its scientific rigour.

About Rflect

We started Rflect because we believe that inner development is key to achieving sustainable development, and that students genuinely deserve better. Simply put, we are convinced that more reflection leads to better outcomes for both people and the planet. Our mission is to enable universities to equip their students with a lifelong habit of inner development. We understand this goal is ambitious, and we take pride in that ambition. Our motivation comes from the vision of bringing inner development to 200 million tertiary students worldwide and supporting universities in redefining their pivotal role in society. Rflect is an early-stage edtech startup in Switzerland and is enabled by the Migros Pioneer Fund. Learn more: rflect.ch

Imprint

This report was provided by Rflect in collaboration with academic advisors. Have questions? Get in touch: info@rflect.ch

Bibliography

Inner Development Goals (2021). https://innerdevelopmentgoals.org/framework/ (Accessed 23.07.2024)

Rflect et al. (2024). Preliminary Insights into Student Skills Development.